Artificial Intelligence (AI) has transformed industries by automating processes and enhancing decision-making. Among the significant advancements driving AI’s progress is deep learning, which has unlocked unparalleled capabilities in image recognition, natural language processing, and more. However, deep learning methods often operate as “black boxes,” making it difficult to understand how decisions are made. This lack of transparency poses critical challenges, particularly in high-stakes fields like business, medicine, and finance, where trust and accountability are paramount.

Explainable AI (XAI) has emerged to address these challenges by providing clarity and insight into AI models, their predictions, and their potential biases. XAI not only describes how AI models function but also helps assess their accuracy, fairness, and transparency. By bridging the gap between AI’s complexity and human understanding, XAI plays a crucial role in building trust in AI systems deployed in production. It also encourages organizations to take a responsible approach to AI development, ensuring that these systems are ethical, accountable, and aligned with regulatory requirements.

In this article, we will explore the concept of Explainable AI, understand why it matters, and examine how it is shaping the future of trustworthy AI.

What is Explainable AI?

Explainable AI (XAI) refers to a set of techniques and methodologies designed to make AI decision-making processes transparent and understandable. Unlike traditional AI, where decisions often emerge from "black-box" models, XAI emphasizes clarity by explaining how and why specific outcomes are reached. For example, while a traditional AI model might recommend a loan approval, XAI can provide the reasoning—such as the applicant's credit score, income level, and repayment history. This transparency is critical for fostering trust and enabling stakeholders to challenge or refine AI-driven outcomes.

Need for XAI

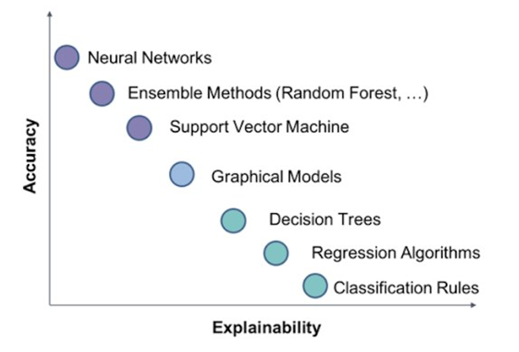

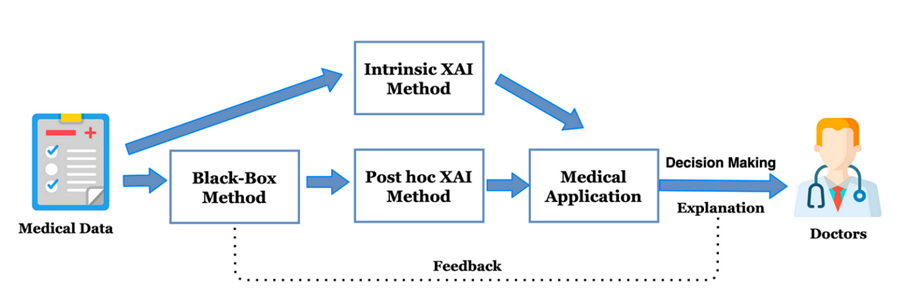

As AI models become more complex, their ability to capture intricate patterns and relationships in data improves, often resulting in higher accuracy. However, this increased complexity comes at a cost—reduced interpretability. Advanced models like deep neural networks operate as black boxes, making it challenging to understand how they arrive at specific predictions. This lack of transparency is especially problematic in fields like healthcare, finance, and law, where the consequences of AI-driven decisions can be significant and require justification. For instance, a doctor needs to understand why an AI system recommends a particular diagnosis, or a financial institution must justify why a loan was denied to a customer. The inability to explain these decisions can lead to mistrust, legal challenges, and even biased outcomes. This is where XAI steps in—to bridge the gap between accuracy and interpretability.

Ref: https://link.springer.com/chapter/10.1007/978-3-030-49065-2_27

Why Explainable AI Matters

Explainable AI (XAI) is essential because it addresses critical issues related to transparency, accountability, and trust in AI systems. Here’s why it matters:

Transparency: XAI allows users to understand the decision-making processes of AI models, offering clarity into how inputs lead to outputs. This is vital for gaining stakeholder trust.

Bias Mitigation: By revealing how decisions are made, XAI helps identify and reduce potential biases in models, ensuring fairer outcomes.

Regulatory Compliance: Many industries, such as finance and healthcare, are subject to strict regulations that require automated decisions to be explainable. XAI helps organizations meet these requirements.

Accountability: Organizations using AI systems must be able to justify and stand behind their decisions. XAI provides the tools needed to trace and justify those decisions.

Improved User Trust: Users are more likely to rely on AI systems when they understand how and why decisions are made, fostering confidence in AI-powered solutions.

In summary, the need for XAI arises from the increasing complexity of AI models and their opaque nature, while its importance lies in ensuring AI systems are transparent, fair, and aligned with ethical and regulatory standards. Together, these aspects make XAI a cornerstone for the responsible deployment of AI technologies.

XAI vs. Traditional AI

Artificial Intelligence (AI) has proven to be a transformative force across industries, but one of its major limitations is its opacity, particularly in complex machine learning models such as deep neural networks. This is where Explainable AI (XAI) diverges, offering a level of transparency and interpretability that traditional AI often lacks. Comparing the two clarifies their respective roles, limitations, and advantages.

The key distinction between AI and XAI lies in their approach to decision-making. While traditional AI prioritizes accuracy, XAI balances accuracy with interpretability, enabling users to trust and rely on AI systems with confidence. As AI continues to permeate critical sectors, adopting explainable methodologies is not just a preference but a necessity for ethical, accountable, and transparent AI deployment.

Methods of Explainable AI (XAI)

XAI employs several techniques to make machine learning models and predictions more transparent and interpretable. Here’s a detailed overview of the most widely used methods:

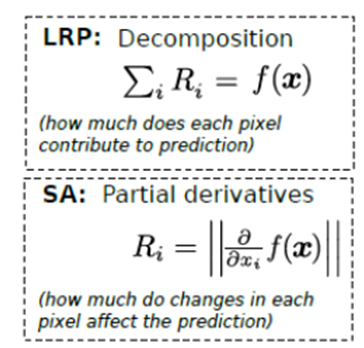

Sensitivity Analysis (SA)

Sensitivity Analysis explains the predictions of deep learning models by evaluating how changes in input features affect the model's output.

Process: The relevance of each input feature is determined by its local sensitivity, the partial derivative $\partial f(x) / \partial x_i$ of the model output $f(x)$ with respect to each input variable $x_i$.

Outcome: Features with higher sensitivity values are considered more relevant to the model’s prediction.

Limitation: SA shows how the output would change if an input changed, not why the model produced the value $f(x)$ in the first place, so it gives an incomplete picture of the model’s behavior.

For example, in a model predicting housing prices, SA might reveal that features like location and square footage have the highest impact on price fluctuations.
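To make the computation concrete, here is a minimal sketch in Python. It assumes a hypothetical house-price model on standardized features (`location`, `area`, `age`), estimates each partial derivative with central finite differences instead of backpropagation, and uses the squared derivative as the relevance score, which is one common convention.

```python
def house_price(x):
    """Hypothetical price model on standardized features [location, area, age]."""
    location, area, age = x
    return 40.0 * location + 25.0 * area + 5.0 * area ** 2 - 8.0 * age


def sensitivity(f, x, h=1e-5):
    """Estimate df/dx_i by central differences; relevance R_i = (df/dx_i)^2."""
    grads = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        grads.append((f(up) - f(down)) / (2 * h))
    relevance = [g ** 2 for g in grads]
    return grads, relevance


grads, relevance = sensitivity(house_price, [1.0, 0.5, -0.2])
for name, g, r in zip(["location", "area", "age"], grads, relevance):
    print(f"{name:8s} df/dx = {g:7.2f}  relevance = {r:8.1f}")
```

Location receives the highest relevance, followed by area. Because the area term is nonlinear, its derivative depends on the point where it is evaluated, which is why SA explanations are local.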

Layer-Wise Relevance Propagation (LRP)

LRP explains model predictions by redistributing the output $f(x)$ backward through the network, assigning a relevance score $R_i$ to each input feature $x_i$.

Process: Relevance is propagated layer by layer using redistribution rules designed to conserve it. No relevance is artificially created or lost along the way, so the input relevances (approximately) sum to the output, $\sum_i R_i = f(x)$.

Outcome: The method identifies specific input features that contribute most to the prediction.

Applications: LRP is widely used in tasks like image classification, text document classification, and video action recognition.

For example, in image recognition, LRP might highlight which parts of an image (e.g., edges, textures) contributed to identifying a dog in the picture.

Ref: https://link.springer.com/chapter/10.1007/978-3-030-32236-6_51
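The backward redistribution can be illustrated with the LRP-ε rule, which is one of several propagation rules, on a tiny hypothetical network. Each lower-layer unit $j$ receives relevance $R_j = \sum_k \frac{a_j w_{jk}}{z_k + \epsilon\,\mathrm{sign}(z_k)} R_k$, where $a_j$ is its activation, $w_{jk}$ the weight to upper unit $k$, and $z_k = \sum_j a_j w_{jk}$. The weights below are invented for illustration, and the layers have no biases, so relevance is conserved up to the small stabilizer ε.

```python
def dense(inputs, weights):
    """Bias-free dense layer; weights[i][j] connects input i to output unit j."""
    return [sum(inputs[i] * weights[i][j] for i in range(len(inputs)))
            for j in range(len(weights[0]))]


def relu(values):
    return [max(0.0, v) for v in values]


def lrp_epsilon(inputs, weights, relevance_out, eps=1e-9):
    """Redistribute relevance_out onto `inputs` in proportion to a_i * w_ij."""
    z = dense(inputs, weights)  # total input reaching each upper unit
    relevance_in = [0.0] * len(inputs)
    for j, rel in enumerate(relevance_out):
        denom = z[j] + (eps if z[j] >= 0 else -eps)  # stabilizer avoids division by zero
        for i, a in enumerate(inputs):
            relevance_in[i] += a * weights[i][j] / denom * rel
    return relevance_in


# Hypothetical network: 3 inputs -> 2 ReLU hidden units -> 1 linear output
W1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 1.0]]
W2 = [[2.0], [1.0]]
x = [1.0, 2.0, 0.5]

hidden = relu(dense(x, W1))
output = dense(hidden, W2)                  # f(x)
r_hidden = lrp_epsilon(hidden, W2, output)  # output layer -> hidden layer
r_input = lrp_epsilon(x, W1, r_hidden)      # hidden layer -> input features

print("f(x) =", output[0])
print("input relevances:", [round(r, 3) for r in r_input])
print("sum of relevances:", round(sum(r_input), 3))
```

Here the second input receives most of the relevance (6.0), the third input receives slightly negative relevance (-0.5), and the three scores add up to f(x) = 6.5.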

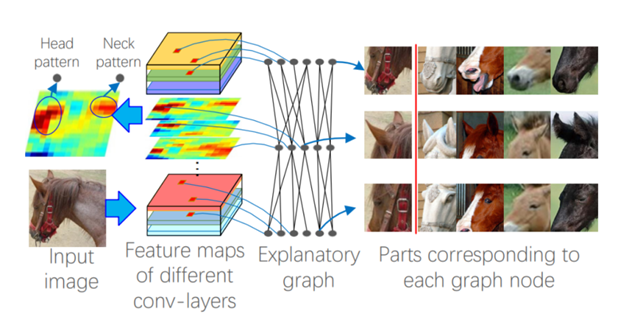

Explanatory Graph

Explanatory graphs visualize the internal workings of a convolutional neural network (CNN).

Structure: Each layer of the graph corresponds to a CNN layer, with nodes representing object parts derived from CNN filter responses.

Visualization: Lower layers represent smaller object parts, while higher layers represent larger parts. Edges in the graph indicate relationships between parts.

Outcome: This method helps users understand how CNNs identify and relate different parts of an object, improving interpretability.

For example, in object detection, an explanatory graph might show how a CNN recognizes a car by detecting its wheels, windows, and body shape.

Ref: https://link.springer.com/chapter/10.1007/978-3-030-32236-6_51
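To illustrate the structure of such a graph (not the algorithm that learns it from filter responses), here is a hand-written explanatory graph in Python. The part names, the layer names `conv3` to `conv5`, and the edges are all hypothetical. Tracing down from the top node mirrors the car example: larger parts in higher layers are supported by smaller parts in lower layers.

```python
# Hypothetical explanatory graph for a car detector.
# Each node is an object part, tagged with the CNN layer it is associated with.
LAYER_OF = {
    "car": "conv5",
    "wheel": "conv4", "window": "conv4", "body": "conv4",
    "rim": "conv3", "tire": "conv3", "window frame": "conv3", "door panel": "conv3",
}

# Edges relate a higher-layer part to the lower-layer parts it is built from.
CHILDREN = {
    "car": ["wheel", "window", "body"],
    "wheel": ["rim", "tire"],
    "window": ["window frame"],
    "body": ["door panel"],
}


def explain_part(part, depth=0):
    """Return an indented trace from `part` down to the smaller parts supporting it."""
    lines = ["  " * depth + f"{part} ({LAYER_OF[part]})"]
    for child in CHILDREN.get(part, []):
        lines.extend(explain_part(child, depth + 1))
    return lines


print("\n".join(explain_part("car")))
```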

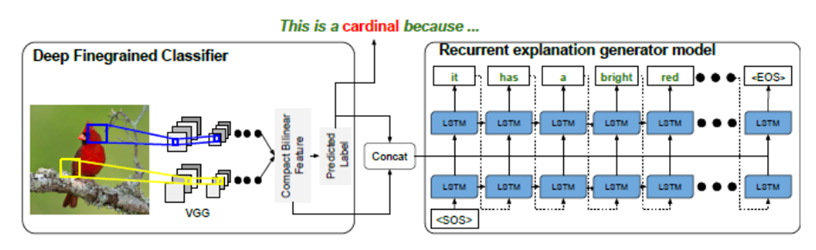

Text Explanations

This approach provides detailed visual and textual explanations for image classifications.

Process: Visual features are captured through a deep recognition pipeline, and an LSTM (Long Short-Term Memory) network generates descriptive explanations that link these features to the predicted class.

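Outcome: Explanations are both image-relevant (they describe what is visible in the particular image) and class-relevant (they mention features that distinguish the predicted class).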

Example: A model classifies a bird as a western grebe with an explanation like, “This is a western grebe because it has a long white neck, a pointy yellow beak, and a red eye,” differentiating it from similar birds.

Ref: https://link.springer.com/chapter/10.1007/978-3-030-32236-6_51
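The described pipeline generates sentences with a class-conditioned LSTM, which is too large to reproduce here. As a loosely analogous sketch, the Python below swaps in a simple template. It keeps only the attributes that are both detected in the image (image-relevant) and listed in a hand-written profile for the predicted class (class-relevant). The attribute names and the `CLASS_ATTRIBUTES` profile are hypothetical.

```python
# Hypothetical attribute profile for each class (a stand-in for what the
# class-conditioned LSTM learns from annotated descriptions).
CLASS_ATTRIBUTES = {
    "western grebe": ["long white neck", "pointy yellow beak", "red eye", "black crown"],
}


def join_phrases(phrases):
    """Join phrases as 'a', 'a and b', or 'a, b, and c'."""
    if len(phrases) == 1:
        return phrases[0]
    if len(phrases) == 2:
        return f"{phrases[0]} and {phrases[1]}"
    return ", ".join(phrases[:-1]) + ", and " + phrases[-1]


def explain_prediction(predicted_class, detected_attributes):
    # Keep attributes that are class-relevant (in the profile) AND
    # image-relevant (detected in this particular image).
    relevant = [a for a in CLASS_ATTRIBUTES[predicted_class] if a in detected_attributes]
    if not relevant:
        return f"This is a {predicted_class}, but no supporting attributes were detected."
    phrases = [f"a {a}" for a in relevant]
    return f"This is a {predicted_class} because it has {join_phrases(phrases)}."


detected = {"long white neck", "pointy yellow beak", "red eye", "water background"}
print(explain_prediction("western grebe", detected))
```

The water background is dropped because it is not class-relevant, and the black crown is dropped because it was not detected in this image.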

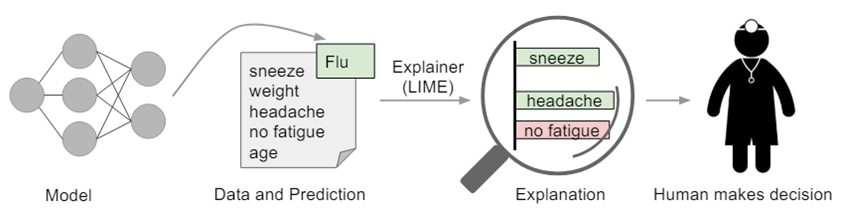

LIME – Local Interpretable Model-Agnostic Explanations

LIME explains individual predictions by approximating the model’s behavior locally with a simpler, interpretable model.

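Process: The complex model is treated as a black box. LIME perturbs the instance, queries the model on the perturbed samples, and fits a simple surrogate model (such as a sparse linear model) in which each sample is weighted by its proximity to the original instance.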

Strengths:

Explains individual predictions without requiring access to the internal workings of the model.

Helps identify local features influencing the decision.

Limitation: Local explanations may not generalize to the entire dataset.

For example, LIME can explain why a specific applicant was denied a loan based on features like income and credit history, without describing how the model behaves for other applicants.

Ref: https://arxiv.org/pdf/1602.04938
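The procedure can be sketched in plain Python, written from scratch rather than with the `lime` package. It assumes a hypothetical `loan_model` black box with two numeric features, samples Gaussian perturbations around the instance, weights them with an exponential proximity kernel, and fits a weighted least-squares linear surrogate. The feature selection and regularization options of the original method are omitted.

```python
import math
import random


def loan_model(features):
    """Hypothetical black box: approval probability from [income, credit_history]."""
    income, credit = features
    return 1.0 / (1.0 + math.exp(-(0.8 * income + 1.5 * credit - 3.0)))


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_explain(predict, instance, num_samples=1000, scale=0.5, kernel_width=0.75, seed=0):
    """Fit a proximity-weighted linear surrogate around `instance`; return its slopes."""
    rng = random.Random(seed)
    dim = len(instance) + 1  # +1 for the intercept
    xtwx = [[0.0] * dim for _ in range(dim)]
    xtwy = [0.0] * dim
    for _ in range(num_samples):
        sample = [v + rng.gauss(0.0, scale) for v in instance]  # perturb the instance
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        weight = math.exp(-dist2 / kernel_width ** 2)  # nearby samples count more
        z = [1.0] + sample
        y = predict(sample)  # query the black box
        for i in range(dim):
            xtwy[i] += weight * z[i] * y
            for j in range(dim):
                xtwx[i][j] += weight * z[i] * z[j]
    coef = solve(xtwx, xtwy)  # weighted normal equations
    return coef[1:]  # drop the intercept


weights = lime_explain(loan_model, [1.0, 0.5])
print(dict(zip(["income", "credit_history"], weights)))
```

The surrogate's slopes form the local explanation. Near this applicant, both features raise the approval probability, and credit history weighs more. The slopes say nothing about applicants far from this instance.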

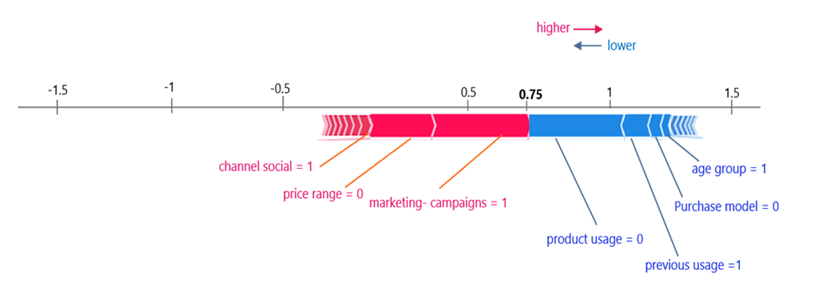

SHAP (SHapley Additive exPlanations)

SHAP assigns importance scores to features, showing their contributions to a specific prediction.

Inspired by Game Theory: SHAP uses the Shapley value to fairly distribute the prediction outcome among input features.

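Process: In principle, the Shapley value averages a feature’s marginal contribution over all possible combinations of the other features. Because the number of combinations grows exponentially with the number of features, SHAP relies in practice on approximations and model-specific algorithms (for example, TreeSHAP for tree ensembles).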
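For reference, the Shapley value of feature $i$ weights its marginal contribution by the size of each subset $S$ of the remaining features $F \setminus \{i\}$. The contributions then add up from the base value $\phi_0$ to the prediction:

$$
\phi_i = \sum_{S \subseteq F \setminus \{i\}} \frac{|S|!\,(|F| - |S| - 1)!}{|F|!}\,\bigl[f_{S \cup \{i\}}(x) - f_S(x)\bigr],
\qquad
f(x) = \phi_0 + \sum_{i \in F} \phi_i
$$

Here $f_S(x)$ is the model output when only the features in $S$ are known. SHAP approximates this by averaging over a background dataset. The sum has $2^{|F|-1}$ terms per feature.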

Visualization: SHAP force plots show the base value (the model’s average prediction over a background dataset) and how each feature’s contribution, positive or negative, pushes the prediction away from that base value.

For example, in a diabetes prediction model, SHAP might show that high glucose levels and BMI significantly contribute to a high-risk prediction, while other features like age have smaller impacts.

Ref: https://github.com/shap/shap
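The following from-scratch sketch computes exact Shapley values for a hypothetical three-feature diabetes risk score. It does not use the `shap` library. As a simplification, features outside a coalition are set to a single hypothetical baseline patient rather than averaged over a background dataset, and the baseline’s score plays the role of the base value. The model includes a glucose-BMI interaction term, which Shapley values split between the two features.

```python
from itertools import combinations
from math import factorial

FEATURES = ["glucose", "bmi", "age"]


def risk_score(x):
    """Hypothetical diabetes risk score with a glucose-BMI interaction term."""
    g, b, a = x["glucose"], x["bmi"], x["age"]
    return 0.02 * g + 0.05 * b + 0.01 * a + 0.001 * (g - 100) * (b - 25)


def coalition_value(model, instance, baseline, coalition):
    """Model output when only features in `coalition` take the instance's values."""
    mixed = {f: (instance[f] if f in coalition else baseline[f]) for f in FEATURES}
    return model(mixed)


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every coalition (exponential cost)."""
    n = len(FEATURES)
    phi = {}
    for feature in FEATURES:
        others = [f for f in FEATURES if f != feature]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                gain = (coalition_value(model, instance, baseline, s | {feature})
                        - coalition_value(model, instance, baseline, s))
                total += weight * gain
        phi[feature] = total
    return phi


baseline = {"glucose": 100.0, "bmi": 25.0, "age": 50.0}  # hypothetical "average patient"
patient = {"glucose": 160.0, "bmi": 35.0, "age": 45.0}
phi = shapley_values(risk_score, patient, baseline)
base_value = risk_score(baseline)

print(f"base value {base_value:.2f} -> prediction {risk_score(patient):.2f}")
for name, value in sorted(phi.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:8s} {value:+.2f}")
```

For this patient, glucose contributes +1.50, BMI +0.80, and age -0.05, moving the score from the base value of 3.75 to the prediction of 6.00. This additivity is the property a force plot displays. Enumerating coalitions doubles in cost with each added feature, which is why practical explainers approximate.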

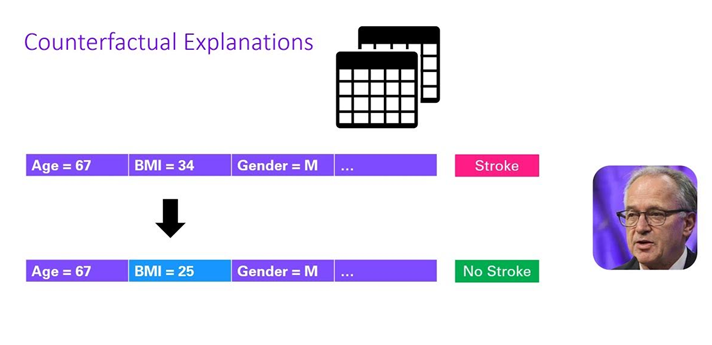

Counterfactual Explanations

Counterfactual explanations provide insights by showing how altering input features can lead to different outcomes.

Process: Generate hypothetical scenarios where specific feature changes result in a different prediction.

Outcome: Helps users understand what changes could lead to a favorable outcome.

Example: In a heart attack prediction model, a patient with a predicted 80% risk could be told that, according to the model, reducing their BMI to 25 would lower the predicted risk to 30%.

Ref: https://www.youtube.com/watch?v=UUZxRct8rIk
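A counterfactual can be found by searching for the smallest input change that moves the prediction to the desired side of a threshold. The sketch below simplifies heavily. It assumes a hypothetical logistic `heart_risk` model, varies only BMI while holding blood pressure fixed, and uses bisection. Bisection is valid here only because the assumed risk increases monotonically with BMI. Practical methods search over several features at once and penalize the distance from the original input.

```python
import math


def heart_risk(patient):
    """Hypothetical model: predicted risk from BMI and systolic blood pressure."""
    z = 0.3 * (patient["bmi"] - 30.0) + 0.04 * (patient["systolic_bp"] - 130.0)
    return 1.0 / (1.0 + math.exp(-z))


def bmi_counterfactual(patient, target=0.5, lowest_bmi=15.0, tol=1e-6):
    """Largest BMI (other features fixed) whose predicted risk is <= target.

    Assumes risk rises monotonically with BMI, so bisection applies.
    Returns None if even `lowest_bmi` does not reach the target.
    """
    if heart_risk(patient) <= target:
        return patient["bmi"]  # already favourable; no change needed
    lo, hi = lowest_bmi, patient["bmi"]
    if heart_risk({**patient, "bmi": lo}) > target:
        return None
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if heart_risk({**patient, "bmi": mid}) <= target:
            lo = mid  # still favourable: try a smaller change
        else:
            hi = mid
    return lo


patient = {"bmi": 35.0, "systolic_bp": 140.0}
cf_bmi = bmi_counterfactual(patient)
print(f"predicted risk now: {heart_risk(patient):.2f}")
print(f"if BMI were {cf_bmi:.1f}: {heart_risk({**patient, 'bmi': cf_bmi}):.2f}")
```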

These XAI methods serve different purposes, from understanding individual predictions to visualizing model behavior and uncovering hidden biases. By leveraging these techniques, organizations can build AI systems that are more transparent, trustworthy, and aligned with ethical and regulatory standards.

Applications of Explainable AI (XAI)

Explainable AI (XAI) has a wide range of applications across industries, providing transparency and trust in decision-making systems where understanding the rationale behind AI-driven outputs is critical.

Healthcare

In healthcare, explainable AI is vital for interpreting model recommendations to ensure trust and compliance with regulations. By using techniques such as feature importance analysis and decision tree visualizations, clinicians can validate AI-driven diagnostic predictions and treatment recommendations. This transparency not only enhances patient safety but also enables personalized treatments, ultimately improving diagnostic accuracy and fostering better patient outcomes.

Ref: https://www.mdpi.com/2075-4418/12/2/237

Automobile

The automobile industry, particularly in the development of autonomous vehicles, benefits greatly from explainability. Autonomous driving systems carry high stakes, and even minor errors can have severe consequences. XAI enables developers and engineers to understand the limitations and capabilities of systems such as self-driving algorithms, braking mechanisms, and parking aids. By identifying and addressing flaws before deployment, XAI helps mitigate bias and improves the safety and reliability of autonomous driving technology.

Ref: https://arxiv.org/html/2112.11561v5

Insurance

In the insurance sector, XAI is changing how insurers use AI systems. Transparent model outputs let insurers trust, understand, and audit their systems, which in turn improves operations. Explainable AI has been instrumental in improving customer acquisition and quote conversion rates, increasing productivity, and reducing claims rates and fraud. The result is more efficient processes and greater customer satisfaction, making XAI a significant development for the industry.

Defence

The defence sector relies on XAI to enhance operational transparency and decision-making. AI systems in military applications, such as intelligence analysis, autonomous vehicles, and strategic planning, are made more effective and trustworthy through explainability. By providing insights into the reasoning behind AI recommendations, XAI helps commanders and policymakers make informed decisions in complex military scenarios, fostering confidence and clarity in high-stakes environments.

Energy

In the energy industry, XAI plays a critical role in optimizing operations and resource management. By explaining insights from AI models, such as predicting energy demand patterns, optimizing grid stability, and integrating renewable energy sources, XAI enables informed decision-making. Energy companies and regulators can better understand recommendations on resource allocation, maintenance scheduling, and strategic investments, ensuring sustainable energy management and efficient operations.

Conclusion

As AI adoption grows, so does the importance of explainability. Advances in XAI tools and frameworks will enhance our ability to interpret even the most complex models. By aligning XAI with ethical AI practices, organizations can ensure their AI systems remain fair, transparent, and accountable. Explainable AI is more than just a technological advancement—it's a fundamental shift toward responsible AI adoption. By prioritizing transparency and trust, organizations can unlock AI's full potential while addressing ethical and societal concerns. As we move forward, the question is not just how accurate AI can be, but how well we can explain its decisions to those it impacts.